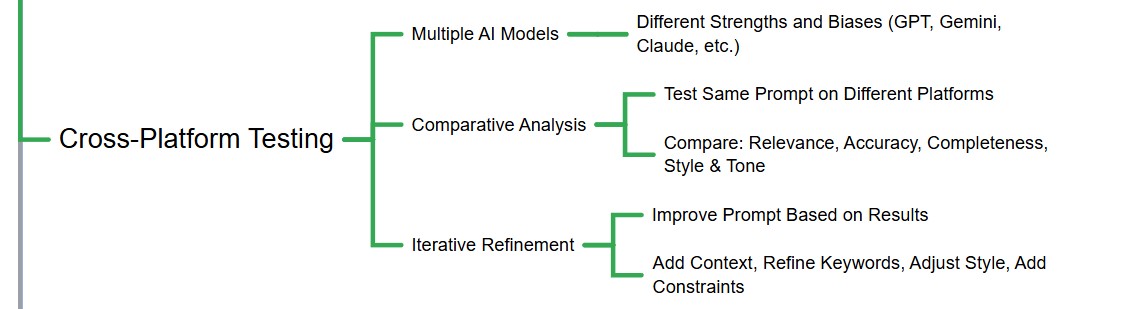

Cross-Platform Testing

Different AI models (such as OpenAI's GPT, Google's Gemini, Anthropic's Claude, Microsoft Copilot, Mistral, DeepSeek, and others) have unique strengths and biases. A prompt that works well on one may fail on another. Cross-platform testing means submitting the same prompt to multiple models and comparing the results. This iterative process lets you refine the prompt for better performance and identify potential model biases.

Key aspects to compare:

- Relevance
- Accuracy
- Completeness
- Style & Tone

Refine your prompt iteratively based on the comparative analysis. This might include adding more context, refining keywords, adjusting the style, or including constraints.
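
As a rough illustration, the comparison step can be sketched as a small harness that sends one prompt to several models and tabulates the responses side by side. Everything named here is hypothetical: `fake_model_a` and `fake_model_b` are stand-ins for real model clients, and `keyword_coverage` is only a crude proxy for completeness. Relevance, accuracy, and style and tone still need human review.

```python
from typing import Callable, Dict, List

# Hypothetical stand-ins for real model clients (assumption: each client
# can be wrapped as a function that takes a prompt and returns text).
def fake_model_a(prompt: str) -> str:
    return "Paris is the capital of France. It sits on the Seine."

def fake_model_b(prompt: str) -> str:
    return "The capital is Paris."

def run_cross_platform(
    prompt: str, models: Dict[str, Callable[[str], str]]
) -> Dict[str, str]:
    """Send the same prompt to every model and collect the responses."""
    return {name: ask(prompt) for name, ask in models.items()}

def keyword_coverage(response: str, expected_keywords: List[str]) -> float:
    """Crude completeness proxy: fraction of expected keywords present."""
    if not expected_keywords:
        return 0.0
    text = response.lower()
    hits = sum(1 for kw in expected_keywords if kw.lower() in text)
    return hits / len(expected_keywords)

def compare(
    prompt: str,
    models: Dict[str, Callable[[str], str]],
    expected_keywords: List[str],
) -> Dict[str, Dict[str, object]]:
    """Build a per-model summary to review side by side."""
    responses = run_cross_platform(prompt, models)
    return {
        name: {
            "response": text,
            "coverage": keyword_coverage(text, expected_keywords),
            "words": len(text.split()),
        }
        for name, text in responses.items()
    }

if __name__ == "__main__":
    report = compare(
        "What is the capital of France, and which river runs through it?",
        {"model_a": fake_model_a, "model_b": fake_model_b},
        expected_keywords=["Paris", "Seine"],
    )
    for name, row in report.items():
        print(name, row["coverage"], row["words"])
```

A low coverage score for one model suggests the prompt may need more context or an explicit constraint for that model. After revising the prompt, rerun the same comparison to check whether the gap closes.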